Favyen Bastani, Sam Madden. ICCV 2021.

Links: [Paper] [Talk] [Slides]

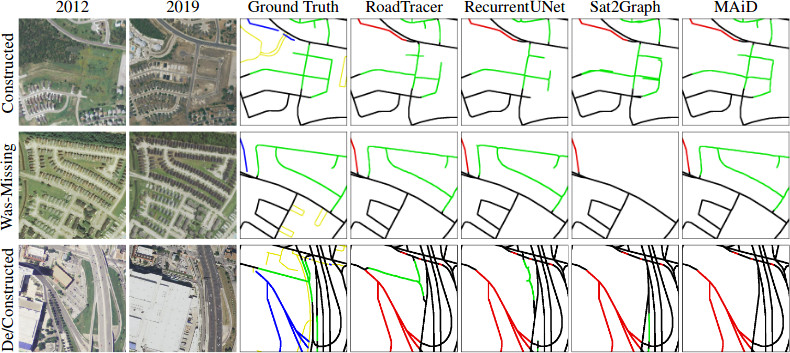

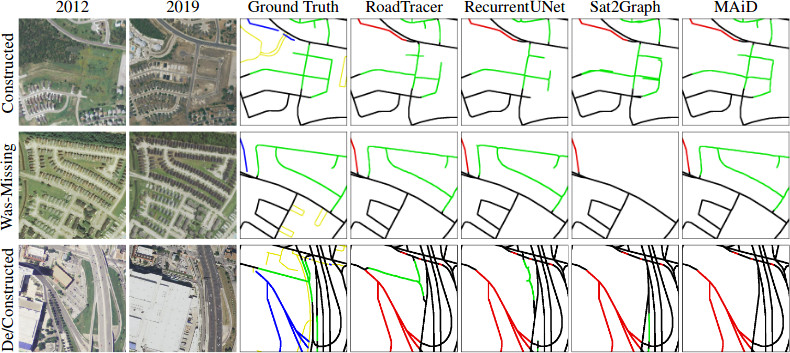

Summary: MUNO21 is a dataset and benchmark for machine learning methods that automatically update and maintain digital street map datasets. Previous datasets focus on road extraction, and measure how well a method can infer a road network from aerial or satellite imagery. In contrast, MUNO21 measures how well a method can modify the road network data in an existing digital map dataset to make it reflect the latest physical road network visible from imagery. This task is more practical, since it doesn't throw away the existing map, but also more challenging, as physical roads may be constructed, bulldozed, or otherwise modified.

Get started: To use the dataset, obtain the code and data using the links above. The git repository includes instructions for setting up the dataset, using baseline methods to infer road networks, post-processing road networks with map fusion (when needed), and computing evaluation metrics.

Cities: MUNO21 includes imagery and road network data from 21 cities in the US: Atlanta, Austin, Baltimore, Boston, Chicago, Dallas, DC, Denver, Detroit, Houston, Los Angeles, Miami, New Orleans, New York, Philadelphia, Phoenix, Pittsburgh, San Antonio, Seattle, San Francisco, and Las Vegas. We focus on the US due to the availability of high-resolution public domain aerial imagery.

Imagery: MUNO21 includes 1-meter-per-pixel aerial imagery obtained from NAIP in the naip/jpg/ folder. Imagery captured at four timestamps between 2012 and 2019 is included. Each image file covers approximately 100 sq km. In some cities, the dataset spans 200 sq km, in which case images are divided into two tiles (named _0_0 and _1_0).

Road network data: Road network data comes from OpenStreetMap (OSM) and is available in the graphs/ folder, either in a simple .graph format or in raw OSM PBF format. The PBF files include road metadata that could be useful. The OSM state at eight yearly timestamps between 2012 and 2019 is included.

Task: The annotations.json file specifies the 1,294 map update scenarios. Let annotation refer to one annotation object. In each scenario, a map update method should input road network data from 2013 (e.g. atlanta_0_0_2013-07-01.graph and atlanta_0_0_2013-07-01_all.graph and/or atlanta_2013-07-01.pbf), along with imagery data from any or all years, and produce an updated road network that reflects the physical roads visible in the most recent aerial imagery.
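As a rough illustration of the inputs described above, the following Python sketch collects the scenario windows and builds the 2013 input file names for one tile. It rests on several assumptions that are not confirmed here: that annotations.json holds a JSON array of annotation objects, that a tile label such as `atlanta_0_0` is the city name followed by two tile indices, and that the files sit directly in graphs/. The helper names (`load_annotations`, `scenario_windows`, `input_paths`) are hypothetical and not part of the MUNO21 code.

```python
import json
from pathlib import Path


def load_annotations(path="annotations.json"):
    """Load the scenarios (assumed here to be a JSON array of objects)."""
    with open(path) as f:
        return json.load(f)


def scenario_windows(annotations):
    """Return the spatial window of every scenario, in pixel coordinates."""
    return [annotation["Cluster"]["Window"] for annotation in annotations]


def input_paths(tile, timestamp="2013-07-01", graph_dir="graphs"):
    """Build the input file paths for one tile, following the naming
    pattern of the examples in the task description."""
    # Assumption: the city name is the tile label minus its two tile indices.
    city = tile.rsplit("_", 2)[0]
    root = Path(graph_dir)
    return {
        "graph": root / f"{tile}_{timestamp}.graph",
        "graph_all": root / f"{tile}_{timestamp}_all.graph",
        "pbf": root / f"{city}_{timestamp}.pbf",
    }
```

For example, `input_paths("atlanta_0_0")["pbf"]` points at `atlanta_2013-07-01.pbf` inside graphs/. Consult the git repository for the actual annotation schema before relying on any field other than `annotation['Cluster']['Window']`.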

Each scenario specifies a spatial window in pixel coordinates where the map has changed (annotation['Cluster']['Window']). A method may use imagery and road network data outside that window, but its output road network should span that window plus 128-pixel padding; it will be evaluated only inside the window (with no padding). The padding ensures that the evaluation metrics are computed correctly along the boundary of the window.
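The padding rule can be sketched in a few lines of Python. This is only an illustration: it assumes the window is stored as `[x_min, y_min, x_max, y_max]` in pixel coordinates, which should be checked against the repository, and the names `Window`, `pad_window`, and `edges_near_window` are hypothetical.

```python
from typing import NamedTuple

PADDING = 128  # pixels of padding around the window, per the task description


class Window(NamedTuple):
    # Assumed layout of annotation['Cluster']['Window']; verify before use.
    x_min: int
    y_min: int
    x_max: int
    y_max: int


def pad_window(window, padding=PADDING):
    """Grow the window by `padding` pixels on every side."""
    return Window(
        window.x_min - padding,
        window.y_min - padding,
        window.x_max + padding,
        window.y_max + padding,
    )


def contains(window, point):
    """True if the (x, y) point lies inside the window, borders included."""
    x, y = point
    return window.x_min <= x <= window.x_max and window.y_min <= y <= window.y_max


def edges_near_window(edges, window, padding=PADDING):
    """Keep edges with at least one endpoint inside the padded window.

    Simplification: an edge that crosses the padded window with both
    endpoints outside it is dropped; a full segment-rectangle intersection
    test would be needed to keep such edges.
    """
    padded = pad_window(window, padding)
    return [(a, b) for a, b in edges if contains(padded, a) or contains(padded, b)]
```

A method would emit at least the edges covering the padded window, while scoring considers only the unpadded window.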

Methods are evaluated by how well their updated road networks correspond to the most recent road network data. See the paper for details. Three metrics are included in go/metrics/: PixelF1 (geo.go) and APLS (apls.go) are two alternatives for measuring recall, and error rate (error_rate.go) measures precision.

A method may expose a single real-valued parameter that provides a tradeoff between precision and recall. For example, a method that infers road networks using image segmentation may expose the segmentation probability confidence threshold for the "road" class as a parameter -- increasing this threshold generally provides higher precision but lower recall. Methods are compared in terms of their precision-recall curves.
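To make the threshold tradeoff concrete, here is a minimal Python sketch that sweeps a confidence threshold over per-pixel "road" probabilities and reports precision and recall at each setting. It uses plain pixel-level precision and recall as a stand-in; these are not the MUNO21 metrics (PixelF1, APLS, and error rate), and the function names and toy data are hypothetical.

```python
def precision_recall(probs, truth, threshold):
    """Pixel-level precision and recall when every pixel whose road
    probability is at least `threshold` is predicted as road."""
    tp = fp = fn = 0
    for p, t in zip(probs, truth):
        predicted = p >= threshold
        if predicted and t:
            tp += 1
        elif predicted and not t:
            fp += 1
        elif not predicted and t:
            fn += 1
    # Convention chosen for this sketch: an empty denominator scores 1.0.
    precision = tp / (tp + fp) if tp + fp else 1.0
    recall = tp / (tp + fn) if tp + fn else 1.0
    return precision, recall


def pr_curve(probs, truth, thresholds):
    """One (threshold, precision, recall) triple per threshold."""
    return [(t, *precision_recall(probs, truth, t)) for t in thresholds]


if __name__ == "__main__":
    # Toy data: five pixels with predicted road probability and ground truth.
    probs = [0.9, 0.8, 0.6, 0.4, 0.2]
    truth = [1, 1, 0, 1, 0]
    for t, p, r in pr_curve(probs, truth, [0.3, 0.5, 0.7]):
        print(f"threshold={t:.1f} precision={p:.2f} recall={r:.2f}")
```

On this toy input, raising the threshold from 0.3 to 0.7 moves precision from 0.75 to 1.00 while recall falls from 1.00 to 0.67, which is the kind of curve used to compare methods.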