Favyen Bastani, Songtao He, Arjun Balasingam, Karthik Gopalakrishnan, Mohammad Alizadeh, Hari Balakrishnan, Michael Cafarella, Tim Kraska, and Sam Madden. SIGMOD 2020.

Links: [PDF] [Code] [YTStream Dataset] [Talk] [Slides]

Summary: MIRIS is a video query processing optimization for object track queries, which apply predicates on object tracks. The optimization incorporates query planning into a variable framerate object tracker to minimize the number of frames that the tracker must process while still producing accurate query outputs.

Abstract: Video databases enable efficient, automated analysis of video by executing expressive queries. In particular, video queries with object track predicates are useful in a wide range of applications, and include selecting objects that move from one region of the camera frame to another (e.g., find cars that turn right through a junction) and selecting objects with certain speeds (e.g., find animals that stop to drink water from a lake). However, these predicates also present new challenges, as they involve the movement of an object over several successive frames. We propose a novel query-driven tracking approach that integrates query processing with object tracking to efficiently process object track queries and address the computational complexity of object detection methods. By processing video at low framerates when possible, but increasing the framerate when needed to ensure high accuracy on a query, our approach substantially speeds up query execution. We implement query-driven tracking in a video query processor that we call MIRIS, and compare MIRIS against four baselines on a diverse dataset consisting of five sources of video and nine distinct queries. We find that, at the same accuracy, MIRIS accelerates video query processing by as much as 17x over the overlap-based tracking-by-detection method used in existing video database systems.

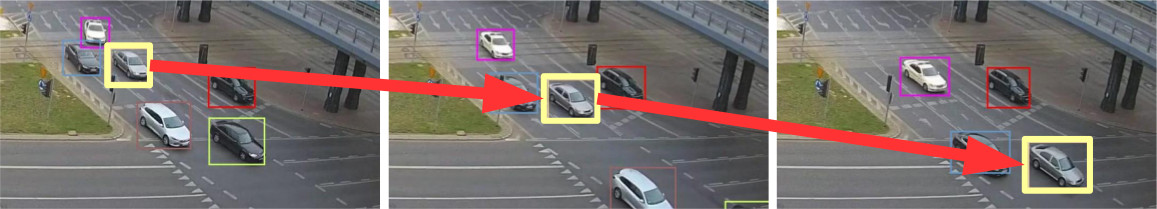

What is an object track? An object detection is the polygon position of an object instance in one image (video frame), and an object track is the sequence of positions where an object appears over a segment of video.
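To make the two definitions concrete, here is a minimal Python sketch of how a detection and a track might be represented. The class names, field names, and the vertex-average `center` helper are illustrative assumptions, not MIRIS's actual data model.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

Point = Tuple[float, float]


@dataclass
class Detection:
    """One object instance in one video frame (hypothetical layout)."""
    frame_idx: int
    polygon: List[Point]  # vertices of the object's outline in pixel coordinates

    def center(self) -> Point:
        # Average of the polygon vertices: a simple stand-in for the
        # object's position, not a true area centroid.
        xs = [p[0] for p in self.polygon]
        ys = [p[1] for p in self.polygon]
        return (sum(xs) / len(xs), sum(ys) / len(ys))


@dataclass
class Track:
    """The sequence of detections of one object over a segment of video."""
    track_id: int
    detections: List[Detection] = field(default_factory=list)

    def add(self, det: Detection) -> None:
        # Keep detections ordered by frame so the track reads as a trajectory.
        self.detections.append(det)
        self.detections.sort(key=lambda d: d.frame_idx)

    def positions(self) -> List[Tuple[int, Point]]:
        return [(d.frame_idx, d.center()) for d in self.detections]


# Example: one object seen in frames 0 and 10, inserted out of order.
track = Track(track_id=1)
track.add(Detection(10, [(4.0, 0.0), (6.0, 0.0), (6.0, 2.0), (4.0, 2.0)]))
track.add(Detection(0, [(0.0, 0.0), (2.0, 0.0), (2.0, 2.0), (0.0, 2.0)]))
print(track.positions())  # [(0, (1.0, 1.0)), (10, (5.0, 1.0))]
```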

Technical Overview:

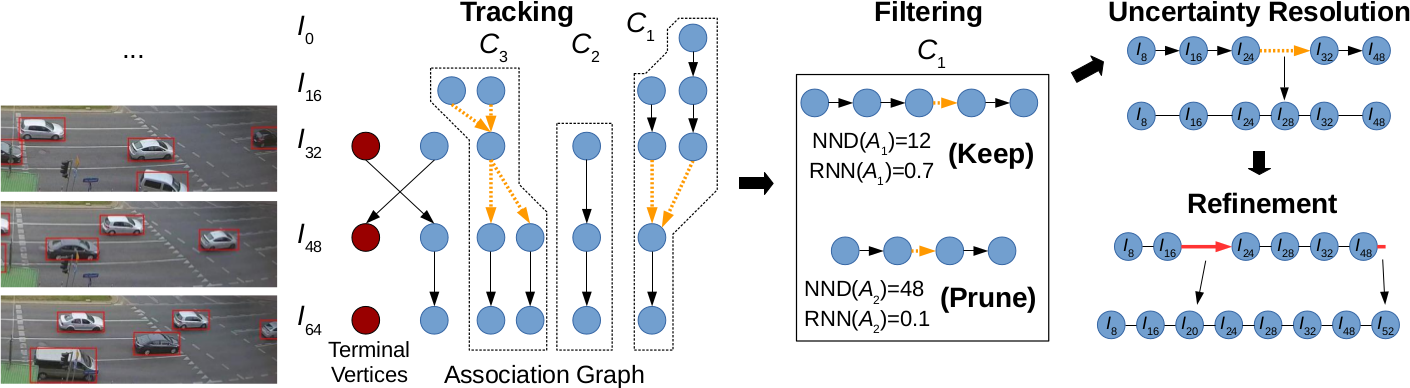

Our variable framerate tracker exposes several parameters that can be selected by the query planner to optimize the execution of a specific query.

First, the tracker processes video at a low initial sampling framerate (e.g., 1 frame per second of video) and produces an association graph representing the identified object tracks. The tracker may sometimes have low confidence in a tracking decision due to the low sampling rate: given a detection in one frame, it may not be confident which of several detections in another frame matches it. In these cases, it produces the nondeterministic orange edges shown above.
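The idea of emitting nondeterministic edges when a match is ambiguous can be sketched as follows. This is only an illustration: the text above does not describe how the tracker scores matches, so the sketch substitutes a plain distance heuristic, and the function name `associate` and the `max_dist` and `margin` parameters are assumptions.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]
# (index in frame A, index in frame B, is_nondeterministic)
Edge = Tuple[int, int, bool]


def associate(frame_a: List[Point], frame_b: List[Point],
              max_dist: float = 50.0, margin: float = 10.0) -> List[Edge]:
    """Link detections in two sampled frames (distance-based stand-in).

    If exactly one candidate is plausible, emit one deterministic edge.
    If several candidates are nearly as close as the best one, emit a
    nondeterministic edge to each of them.
    """
    edges: List[Edge] = []
    for i, a in enumerate(frame_a):
        candidates = sorted(
            (math.dist(a, b), j)
            for j, b in enumerate(frame_b)
            if math.dist(a, b) <= max_dist
        )
        if not candidates:
            continue  # no plausible match, e.g. the object left the scene
        best = candidates[0][0]
        plausible = [j for d, j in candidates if d - best <= margin]
        uncertain = len(plausible) > 1
        edges.extend((i, j, uncertain) for j in plausible)
    return edges


# Object 0 has one nearby candidate; object 1 has two that are almost equally close.
frame_a = [(0.0, 0.0), (100.0, 0.0)]
frame_b = [(5.0, 0.0), (100.0, 20.0), (100.0, -22.0)]
edges = associate(frame_a, frame_b)
print(edges)  # [(0, 0, False), (1, 1, True), (1, 2, True)]
```

The larger the gap between sampled frames, the farther objects move, so more candidates fall within the margin and more edges come out nondeterministic.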

Second, filtering eliminates connected components of tracks (tracks that share nondeterministic edges) that MIRIS is confident will not satisfy the query predicate. This will speed up the next two steps, allowing them to skip irrelevant tracks.
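A rough sketch of the filtering step, assuming tracks are identified by integers and that a hypothetical `may_satisfy` callback stands in for whatever test MIRIS uses to decide that a track cannot satisfy the predicate. Components are built with a small union-find over the nondeterministic edges, and a component is dropped only when none of its tracks may satisfy the predicate.

```python
from typing import Callable, Dict, List, Set, Tuple


def connected_components(track_ids: List[int],
                         nondet_edges: List[Tuple[int, int]]) -> List[Set[int]]:
    """Group tracks that share nondeterministic edges (union-find)."""
    parent: Dict[int, int] = {t: t for t in track_ids}

    def find(x: int) -> int:
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving
            x = parent[x]
        return x

    for a, b in nondet_edges:
        parent[find(a)] = find(b)

    groups: Dict[int, Set[int]] = {}
    for t in track_ids:
        groups.setdefault(find(t), set()).add(t)
    return list(groups.values())


def filter_components(components: List[Set[int]],
                      may_satisfy: Callable[[int], bool]) -> List[Set[int]]:
    """Keep a component if any of its tracks might satisfy the predicate."""
    return [c for c in components if any(may_satisfy(t) for t in c)]


# Tracks 1, 2, 3 are tied together by nondeterministic edges; 4 and 5 stand alone.
components = connected_components([1, 2, 3, 4, 5], [(1, 2), (2, 3)])
kept = filter_components(components, may_satisfy=lambda t: t in {3, 5})
print(kept)  # [{1, 2, 3}, {5}]
```

The whole component is kept when any member qualifies because, until the nondeterministic edges are resolved, it is not yet known which detections belong to which track.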

Third, uncertainty resolution determines which nondeterministic edges are correct by processing additional video frames at higher framerates. The tracker should have higher confidence when processing frames that are closer together in time.
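As an illustration of which extra frames such a step might decode, the sketch below lists the frames visited between two sampled frames when the sampling gap is halved repeatedly. The halving schedule and the function name `intermediate_frames` are assumptions made for this example; the text above does not specify how the higher framerates are chosen.

```python
from typing import List


def intermediate_frames(start: int, end: int, rounds: int) -> List[int]:
    """Frames strictly between start and end that get decoded when the
    sampling gap is halved `rounds` times (assumed schedule)."""
    added = set()
    boundaries = [start, end]
    for _ in range(rounds):
        next_boundaries = [boundaries[0]]
        for lo, hi in zip(boundaries, boundaries[1:]):
            mid = (lo + hi) // 2
            if mid not in (lo, hi):  # stop once frames are adjacent
                added.add(mid)
                next_boundaries.append(mid)
            next_boundaries.append(hi)
        boundaries = next_boundaries
    return sorted(added)


# An ambiguous edge spans frames 0 and 24 (1 fps sampling of 24 fps video).
print(intermediate_frames(0, 24, 1))  # [12]
print(intermediate_frames(0, 24, 2))  # [6, 12, 18]
```

Only the frame ranges covered by unresolved edges need this treatment, so the extra decoding cost stays proportional to the amount of ambiguity rather than to the length of the video.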

Fourth, refinement processes yet more video frames to ensure that tracks are captured at a fine enough granularity so that the predicate can be accurately evaluated over the tracks.

Finally, we evaluate the predicate over the refined tracks, and output the tracks that satisfy the predicate.
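The final step can be sketched with one of the predicates mentioned in the abstract: selecting objects that move from one region of the frame to another. In this sketch a track is reduced to an ordered list of center points and regions are axis-aligned rectangles; both simplifications, along with the names `moves_between` and `select_tracks`, are assumptions for illustration.

```python
from typing import Callable, Dict, List, Tuple

Point = Tuple[float, float]
Rect = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def in_rect(p: Point, r: Rect) -> bool:
    x0, y0, x1, y1 = r
    return x0 <= p[0] <= x1 and y0 <= p[1] <= y1


def moves_between(track: List[Point], src: Rect, dst: Rect) -> bool:
    """True if the track starts inside `src` and ends inside `dst`."""
    return len(track) >= 2 and in_rect(track[0], src) and in_rect(track[-1], dst)


def select_tracks(tracks: Dict[int, List[Point]],
                  predicate: Callable[[List[Point]], bool]) -> Dict[int, List[Point]]:
    """Return only the tracks that satisfy the predicate."""
    return {tid: pts for tid, pts in tracks.items() if predicate(pts)}


src_region: Rect = (0.0, 0.0, 3.0, 3.0)
dst_region: Rect = (8.0, 8.0, 10.0, 10.0)
tracks = {
    1: [(1.0, 1.0), (5.0, 5.0), (9.0, 9.0)],  # src -> dst: selected
    2: [(1.0, 1.0), (2.0, 2.0)],              # never leaves src
    3: [(9.0, 9.0), (1.0, 1.0)],              # dst -> src: wrong direction
}
selected = select_tracks(tracks, lambda t: moves_between(t, src_region, dst_region))
print(sorted(selected))  # [1]
```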